
Hybrid/Multi-Cloud

A Powerful Paradigm for Enterprises

Prasad Dorbala

Co-Founder & CPO at Avesha

Introduction

A multi-cloud or hybrid strategy gives enterprises the freedom to use the best available cloud-native services for revenue-generating workloads. Organizations adopt multi-cloud deployments for use cases such as:

- Increased reliability

- Improved security

- Cloud bursting

- Disaster recovery

- Vendor neutrality

- Access to differentiated solutions from individual providers

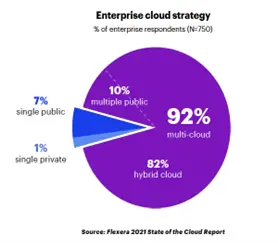

Cloud vendors provide common building blocks such as compute, storage, and networking with cost efficiencies. Organisations today already consume multiple clouds for enterprise IT services such as email, Salesforce, and Microsoft's PaaS offerings for applications. A study from Flexera shows that enterprises today use 5 different clouds on average.

Enterprises are leveraging their existing contract relationships with providers to consume IaaS and SaaS solutions across multi-cloud environments.

Proliferation of Kubernetes

Enterprise applications are being containerized, and Kubernetes has become the de facto standard for container orchestration. Choosing Kubernetes makes multi-cloud design patterns easier for enterprises to adopt. Kubernetes allows builders to deploy, manage, and scale applications with ease. With microservices and Kubernetes container orchestration, builders can improve feature velocity by decomposing applications into smaller functional microservices and can take advantage of the canary deployment model to deliver business value quickly. This proliferation of microservices in enterprise environments is driving the growth of the Kubernetes ecosystem.

Deploying and effectively managing Kubernetes at scale demands a robust design process and strict administrative discipline. As applications are containerized, enterprises face multiple decisions about different types of workload requirements, including, but not limited to, highly transactional, data-centric, and location-specific workloads. Workload distribution needs to take into account factors such as latency, resiliency, compliance, and cost.
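The trade-off described above can be sketched as a simple placement routine. This is a minimal illustration under stated assumptions, not the author's or any product's scheduler: the `Cluster` and `Workload` types, their field names, and the equal latency/cost weights are all hypothetical. Compliance (allowed regions), availability (as a stand-in for resiliency), and a latency budget act as hard filters; latency and cost then rank whichever clusters remain.

```python
from dataclasses import dataclass


# Hypothetical cluster description; field names and units are illustrative assumptions.
@dataclass(frozen=True)
class Cluster:
    name: str
    region: str            # e.g. "eu-west"; used for data-residency checks
    latency_ms: float      # measured latency from this cluster to the workload's users
    cost_per_hour: float   # normalized cost of running the workload here
    healthy: bool = True   # resiliency signal: is the cluster currently available?


# Hypothetical workload requirements.
@dataclass(frozen=True)
class Workload:
    name: str
    allowed_regions: frozenset[str]  # compliance: where this workload's data may live
    max_latency_ms: float            # latency budget


def place(workload: Workload, clusters: list[Cluster],
          latency_weight: float = 0.5, cost_weight: float = 0.5) -> list[Cluster]:
    """Return the eligible clusters for a workload, best candidate first.

    Hard constraints (health, region, latency budget) filter clusters out;
    soft preferences (latency, cost) rank the clusters that remain.
    """
    eligible = [
        c for c in clusters
        if c.healthy
        and c.region in workload.allowed_regions
        and c.latency_ms <= workload.max_latency_ms
    ]
    if not eligible:
        return []

    # Scale each metric by its maximum among eligible clusters so the weights are comparable.
    max_lat = max(c.latency_ms for c in eligible) or 1.0
    max_cost = max(c.cost_per_hour for c in eligible) or 1.0

    def score(c: Cluster) -> float:
        return (latency_weight * c.latency_ms / max_lat
                + cost_weight * c.cost_per_hour / max_cost)

    # Lower score is better; the cluster name breaks ties deterministically.
    return sorted(eligible, key=lambda c: (score(c), c.name))


if __name__ == "__main__":
    clusters = [
        Cluster("onprem-dc1", "eu-west", latency_ms=8, cost_per_hour=3.0),
        Cluster("cloud-a-eu", "eu-west", latency_ms=12, cost_per_hour=1.0),
        Cluster("cloud-b-us", "us-east", latency_ms=5, cost_per_hour=0.5),
    ]
    eu_only = Workload("payments", frozenset({"eu-west"}), max_latency_ms=20)
    print([c.name for c in place(eu_only, clusters)])  # prints ['cloud-a-eu', 'onprem-dc1']
```

In this example the US cluster is excluded by the residency rule even though it is the cheapest and fastest, which is the point of treating compliance as a filter rather than as one more weighted score.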

Although enterprises use multiple clouds to deploy applications, each application is typically siloed in a single cloud. However, this deployment pattern is changing quickly as data integration across clouds grows.

Each cloud provider has a unique value proposition for workloads to consider. Take Oracle Cloud as an example: apart from computational capabilities, it brings best-in-class database management functionality and a surrounding ecosystem of analytics and cloud-native products.

Enterprises containerize their workloads and reap the benefits of multi-cloud architectures to bolster resiliency and consume best-in-class features from cloud providers. However, multi-cloud deployments bring various challenges, such as:

- Scheduling and distributing applications across clusters in a multi-cloud environment

- Providing application networking, either north-south by routing traffic to the right cluster or east-west by creating a network for pod-to-pod communication

- Enforcing security boundaries across applications that are spread across multiple clouds and distributed globally

- Complying with data sovereignty policies set by local government bodies

Mature deployments

As organisations mature in running Kubernetes, they expand their ecosystems to include multi-cluster deployments. These clusters are hosted in datacenters, or distributed across datacenters and hyperscaler clouds. Enterprises choose multi-cluster environments for reasons including team boundaries; latency sensitivity, where applications need to be closer to customers; geographic resiliency; and data jurisdiction policies that restrict how user data may cross geographical boundaries, such as GDPR and the California Consumer Privacy Act (CCPA). As applications become more distributed across clusters, there is a growing need for them to reach back to applications in other clusters.

Platform teams face tedious operational challenges when providing infrastructure to application developers. They must extend the construct of namespace sameness (treating a namespace of a given name as the same namespace, with the same owners and permissions, in every cluster) to multi-cluster deployments, while also maintaining tenancy and governing cluster resources and configuration without configuration drift between environments. Enterprises benefit greatly when clusters across regions and clouds offer uniform access and resources, which speeds up application deployment.

Networking

In the early days of the multi-cloud journey, platform teams made a conscious choice to connect clouds using traditional firewalls, security controls, and routing gear. With the growth of ephemeral application infrastructure and the service-to-service communication paradigm of microservices, building network paths inside the Kubernetes environment better suits the agility that services need and the automation that deployment speed requires.

Kubernetes Networking

Even traditional Kubernetes networking, which we have been deploying for years, is no small feat. While we are used to defining domains and firewalls wherever we need boundaries and control, mapping those constructs onto Kubernetes is challenging. Yes, you do need additional flexibility beyond the namespace. Connecting multiple clusters, let alone distributing workloads across clouds, requires serious planning from NetOps teams.

Multi-cloud management platform

To govern a multi-cloud environment effectively, a platform that manages resources across cloud providers is essential. Its critical principles include defining guardrails for product teams, enforcing security policies, and placing application workloads on demand anywhere across the footprint.

Salient use cases in multi-cloud deployments

Isolation for enterprise teams:

Each team operates multiple workloads, such as services or batch jobs. These workloads frequently need to communicate with each other and require different preferential treatment. Platform teams can isolate each team's set of applications by dedicating compute resources, especially GPU resources, to that team.
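Within a single cluster, a Kubernetes ResourceQuota can already cap a namespace's extended resources, for example with a `requests.nvidia.com/gpu` entry. The sketch below assumes a hypothetical fleet-wide ledger (`TeamQuotaLedger` is an illustrative name, not a real API) that applies the same admission idea across clusters, so one team's GPU usage never eats into another team's dedicated allocation.

```python
from collections import defaultdict


class TeamQuotaLedger:
    """Hypothetical fleet-wide GPU quota tracker, one budget per team.

    Each team's quota is independent: allocations by one team never reduce
    the GPUs available to another team.
    """

    def __init__(self, gpu_quota: dict[str, int]):
        self._quota = dict(gpu_quota)                   # team -> max GPUs across all clusters
        self._used: dict[str, int] = defaultdict(int)   # team -> GPUs currently allocated

    def try_allocate(self, team: str, gpus: int) -> bool:
        """Admit the request only if it keeps the team within its own quota."""
        if gpus <= 0:
            raise ValueError("gpus must be positive")
        limit = self._quota.get(team, 0)  # teams without a quota get no GPUs
        if self._used[team] + gpus > limit:
            return False
        self._used[team] += gpus
        return True

    def release(self, team: str, gpus: int) -> None:
        """Return GPUs to the team's budget, e.g. when a batch job finishes."""
        if gpus <= 0 or gpus > self._used[team]:
            raise ValueError("cannot release more GPUs than are allocated")
        self._used[team] -= gpus

    def used(self, team: str) -> int:
        """GPUs the team currently holds across the fleet."""
        return self._used[team]
```

A request that would push a team over its budget is rejected outright rather than borrowing from another team, which is what makes the allocation "dedicated per team".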

Single operator for multi-customer enterprise (aka SaaS provider):

B2B software vendors increasingly deliver their software as SaaS and need tight isolation between customer A and customer B. Today most such services are delivered with hard cluster boundaries, using separate clusters per customer, which leads to cluster sprawl. Cost optimization and operational efficiency are therefore critical considerations.

Case for Hybrid:

Enterprises that run a data-centric application in their datacenter and want to keep the data there for compliance reasons, while still using cloud services, need a hybrid/multi-cloud environment.

Cloud Bursting:

According to the Flexera 2021 State of the Cloud Report, as summarised by Accenture, 31% of enterprises are looking for hybrid solutions for workload bursting (cloud bursting). This applies to enterprises that require extra capacity on demand but need connectivity back to the data store in the datacenter, and to web properties running in the cloud that need to reach back to the datacenter for database access.
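A minimal sketch of the bursting decision, assuming a hypothetical capacity model (`Capacity`, `plan_burst`, and the 20% default headroom are illustrative, not taken from the Flexera or Accenture material or from any autoscaler): the datacenter cluster, which sits next to the data store, is filled first, and only the overflow is sent to the cloud, up to an agreed ceiling.

```python
import math
from dataclasses import dataclass


# Hypothetical capacity model for illustration; not a real autoscaler API.
@dataclass(frozen=True)
class Capacity:
    onprem_replicas_max: int     # replicas the datacenter cluster can host
    cloud_replicas_max: int      # burst ceiling agreed with the cloud provider
    requests_per_replica: float  # sustainable load per replica (requests/second)


def plan_burst(demand_rps: float, cap: Capacity, headroom: float = 0.2) -> tuple[int, int]:
    """Split the required replicas between the datacenter and the cloud.

    The datacenter is filled first because it sits next to the data store;
    only the overflow bursts to the cloud. `headroom` reserves spare capacity
    for spikes (0.2 means 20% above current demand).
    Raises ValueError if even the cloud ceiling cannot cover the demand.
    """
    if demand_rps < 0 or headroom < 0:
        raise ValueError("demand and headroom must be non-negative")
    needed = math.ceil(demand_rps * (1 + headroom) / cap.requests_per_replica)
    onprem = min(needed, cap.onprem_replicas_max)
    cloud = needed - onprem  # overflow that must run in the cloud
    if cloud > cap.cloud_replicas_max:
        raise ValueError(
            f"need {cloud} cloud replicas, but the ceiling is {cap.cloud_replicas_max}"
        )
    return onprem, cloud
```

For example, with room for 10 replicas on premises, a 20-replica cloud ceiling, and 100 requests per second per replica, `plan_burst(1000, cap, headroom=0.25)` needs 13 replicas and returns `(10, 3)`. The 3 burst replicas still need a network path back to the datacenter data store, which is the connectivity requirement described above.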

In conclusion

According to a report by Jean Atelsek at 451 Research, 76% of companies are adopting multi-cloud and hybrid cloud approaches. In a similar survey from Accenture, 45% of enterprises cited "data integration between clouds" as one of their use cases for multi-cloud architecture. A robust platform that supports and scales multi-cloud and hybrid cloud deployment patterns easily and securely is therefore the need of the hour for enterprises.

This article was also featured by the same author on the ONUG blog.
