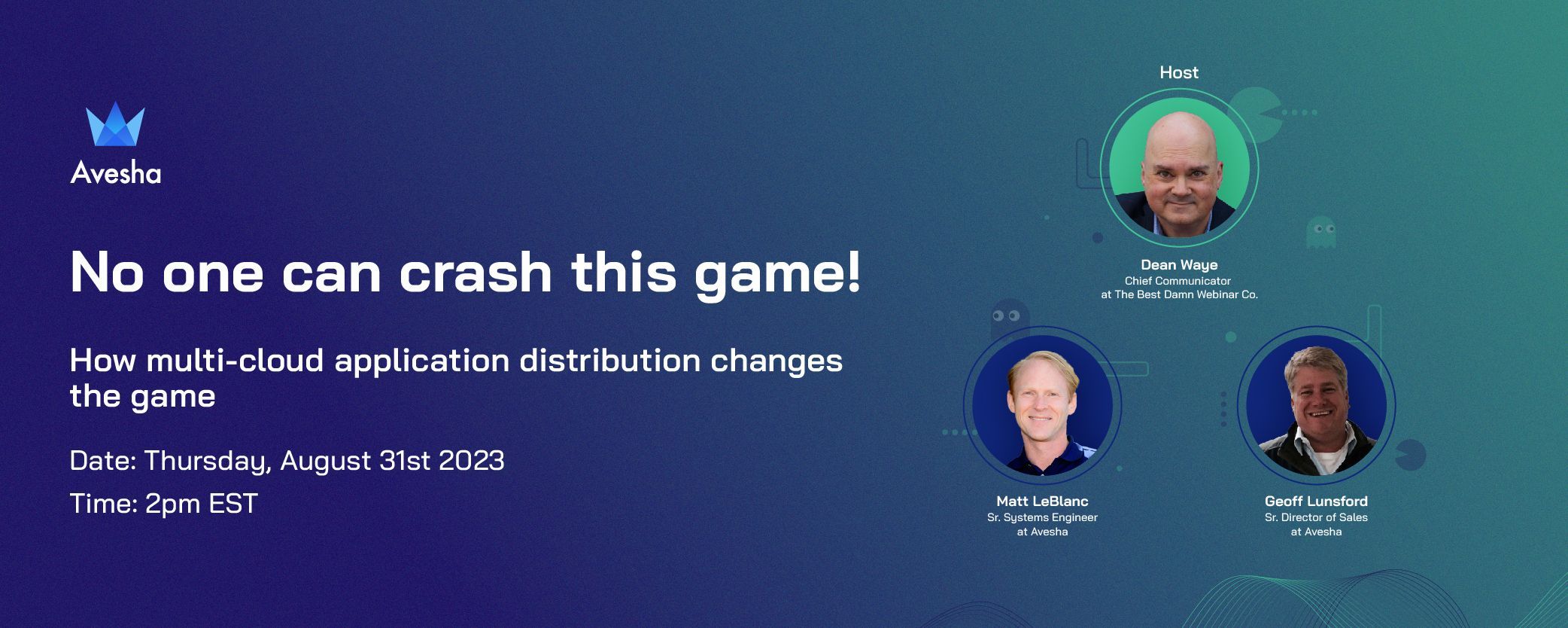

No one can crash this game!

How multi-cloud app distribution just changed the future

Avesha Blogs

Video link: https://youtu.be/A4TB0y6n7kM

Editor’s note: the following transcript has been lightly edited for clarity.

DEAN WAVE:

- Hi, and welcome, I'm Dean and I'm here today with Matt and Geoff. Pretty recently something became possible. Lots of companies already have a distributed cloud storage strategy, that's not new. What happened recently is that the problem of getting a multi-cloud application strategy, a multi-cloud application strategy that worked and that you could afford and scale, well, no, not possible. Not something that most companies could have until now. Matt's going to show, and Geoff is going to explain some use cases for this new approach where the data, the configs, the parameters, and everything the app needs to run are now durable and persistent even if the app needs to switch to a completely different cloud company in a different part of the country even in an outage, any kind of outage, planned and unplanned. And so, guys, hello, Matt and Geoff. Tell us who you are and what you're working on these days.

MATT LEBLANC:

- Sure, my name is Matt LeBlanc. I'm a senior systems engineer here at Avesha. I've been in the Kubernetes space for roughly 3.5 years. I think I've been to seven KubeCons at this point, and I've really been focusing on how workloads can be brought into the Kubernetes space for the last portion of my career. Geoff?

GEOFF LUNSFORD:

- Hi, my name is Geoff Lunsford. I work with technology teams to solve networking issues in the Kubernetes ecosystem, and I've worked for hardware and software companies for over 20 years. My journey has included network infrastructure, open-source software, and data management solutions. And as these areas have actually converged, I've transitioned into the cloud-native space. Now that we have a little background on us, let's talk about who is Avesha. We are the leaders in the multi-cloud secure Kubernetes connectivity space. Our founders have deep expertise in networking, cloud technologies, AI, and DevSecOps. This team holds over 100 patents. They have started several companies representing about $7 billion in exit value including acquisitions by Cisco and Ericsson. Matt, you want to provide a quick overview of KubeSlice and the solution we're going to talk about today?

MATT LEBLANC:

- Sure, so today, we have a little demo to show KubeSlice's capabilities in providing connectivity among heterogeneous clusters. In this case, we're going to be talking about how we can make sure that we keep our scores alive from our Pac-Man application that's running across three of those clusters.

DEAN WAVE:

- Very important.

MATT LEBLANC:

- How are we going to do this? Well, first let's talk about what KubeSlice is, just so we have a general understanding. KubeSlice is a cloud-native solution that allows you to securely connect, manage, and monitor connectivity to your clusters across any cloud and/or on-prem, with any flavor of Kubernetes. It is deployed as an operator via Helm chart, and you can use it in any environment. So that means you can use this for your EKS, AKS, GKE, OKE, Red Hat OpenShift, Rancher. It doesn't matter, we support them all, and we are also doing this with layer three networking. What does that mean? We're not doing it at the application level, where most other solutions are. We are layer three, which is low latency, which means a fast response for your applications, and we also do this with a flat network. So that 192.168.x, that is a non-routable network, which also adds to our security, and we don't use any NAT. That's a big feature, and to add to the security, we also have encryption, right? Using OpenVPN, we're able to keep that information secure, and of course, with a cloud-native solution, you'd expect multi-tenancy support. So we have very granular role-based access control to support your connectivity. Now what does that all mean? Essentially, once you install KubeSlice into your environment, you're able to connect your namespaces across your clusters. That's what we refer to as a global namespace, and that's what we call a KubeSlice, or slice for short. So in the example you're going to see today, we're going to connect the MongoDB namespace across GKE, AKS, and EKS, and we're going to do all this live. Now let's talk about what it would take to do this without KubeSlice. You're going to set up your VPC peering. You're going to have to create your inbound rules, set up load balancing, configure your DNS, and do your exclusions from SNAT.
In addition to those management functions, you're also going to have to manage this within the politics of your own organization. So if you're trying to connect your Google, Azure, and AWS clouds, you're probably going to have to go through a few different teams to get that done. That's a lot of coordination, which takes a lot of time and effort as well. Geoff?

GEOFF LUNSFORD:

- So the alternative to working through each one of these steps that Matt just outlined is KubeSlice. We've simplified the deployment process so you can connect clusters together in minutes regardless of your Kubernetes distribution or where you're running it. For example, across different cloud regions, multiple cloud providers, or on any edge device. So think about it this way. No one knew the importance of sliced bread until we had sliced bread. So Matt, you want to do a little prayer to the demo gods?

MATT LEBLANC:

- Yes, may the demo gods shine upon me today. Let's talk about how we have this set up. So this is a multi-application solution. Yes, it is Pac-Man, but we have Pac-Man, the stateless application, that's running individually on AKS, EKS, and GKE. We also have MongoDB cluster edition that is also running across those, and what we've done is basically set up that cluster edition so we're able to write our high scores. That's basically our database and what we're going to be visualizing today. In the demo, I'm going to kill the pod where that primary Mongo replica set is. We'll go into what that means in just a minute, but we're basically going to kill that application, which simulates your cluster or your cloud being unavailable, and then you're going to see how the database is able to fail over to another cluster, and it's all because of the connectivity provided by KubeSlice. Why is this important? Well, I want to make it clear, just because I put AWS as the outage on this first page, I'm not picking on them. All of the hyperscalers have their outages. It's not just AWS. It's Azure and it's Google. I actually like this one here because it gives you an idea of how long those outages last. Somewhere between an hour and 43 minutes and a day and four hours. So there's a lot of variation, and being able to fail over without any human interaction and keep your data is going to be imperative for keeping your applications up and running and keeping that stateful set.

DEAN WAVE:

- It always feels like a long time no matter how short it is.

MATT LEBLANC:

- Oh, sure.

DEAN WAVE:

- Seconds crawl by, and I just want to clarify. When you start taking down, knocking down parts, you're going to simulate a catastrophic failure. It's not going to bounce back in 90 seconds, right? You're going to knock it all the way down.

MATT LEBLANC:

- Correct, so I'm going to go over and kill the pod that is running the database. So as you can see here, this is actually a screenshot of the MongoDB dashboard. We're going to go to the live version of this, but you can see, let me grab my mouse here. Over on the right here we have the replica set marked with the P. That is the primary replica set. There are two other copies of that. You can see the other two are secondary, with the S down in the lower logo. But let me put this in terms that are a little easier to understand. We're trying to keep the scores alive and the data behind them. So if we take a look here, you can now see our three replica sets. The one over on the right, which was listed as primary, that's our read-write set. The other two are read-only, and basically what that means is that each instance of Pac-Man is going to be able to get a quick reference to the local copy for read only, and when it does the commit, it will write to whatever the primary set is. The MongoDB clustered version is going to manage where this fails over to; it is KubeSlice that is allowing the connectivity. Now as I mentioned before, each app is run locally on each cluster. The high scores are kept on that Mongo database. We have that primary replica set, which is read-write, our secondaries are read-only, and we're going to have a failover from primary to secondary when that cluster goes down. So let's get into it. Any questions before we go into this, Dean or Geoff?
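
The read-local, write-to-primary behavior Matt describes can be sketched with a toy model. This is a simplified illustration, not MongoDB's actual replication or election protocol: the class name `ToyReplicaSet`, the member names, and the promotion rule (pick the last healthy member once a majority survives) are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Member:
    name: str                 # hypothetical member name, e.g. "gke"
    healthy: bool = True
    scores: list = field(default_factory=list)


class ToyReplicaSet:
    """Toy model: one read-write primary, read-only secondaries."""

    def __init__(self, names, primary):
        self.members = {n: Member(n) for n in names}
        self.primary = primary

    def write(self, score):
        # Writes are accepted only by the primary, then copied to every
        # healthy member (real replication is asynchronous; this is not).
        if self.primary is None:
            raise RuntimeError("no primary available")
        for member in self.members.values():
            if member.healthy:
                member.scores.append(score)

    def read(self, local):
        # Each game instance reads from the member in its own cluster.
        return list(self.members[local].scores)

    def fail(self, name):
        # Mark a member down. If it was the primary, promote a survivor,
        # but only when a majority of members is still healthy.
        self.members[name].healthy = False
        if name != self.primary:
            return
        healthy = [n for n, m in self.members.items() if m.healthy]
        if len(healthy) > len(self.members) // 2:
            self.primary = healthy[-1]   # arbitrary choice for the sketch
        else:
            self.primary = None
```

With three members, losing the primary still leaves a two-member majority, so writes continue against the promoted member while the surviving clusters keep serving local reads.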

DEAN WAVE:

- Well, you're dealing with the three hyperscalers and all of our data centers are in different parts of the country, right? There's one in, Amazon's is at the main one in Virginia, right?

MATT LEBLANC:

- Yes, let's take a look at that. So I happen to have KubeSlice Manager, right? It is important to point out that this is an open-source project, but we also have the enterprise version. The enterprise version comes with a dashboard, right? And to your point, Dean, we have each one. So we have our GCP cluster in US West. We have our Azure cluster in US West Two. Obviously, California coast, and then also our EKS cluster over in AWS, which looks like it's in Virginia. Is that what you're getting at?

DEAN WAVE:

- Yeah, so you're not just knocking down, as far as the demo's concerned, you're not just knocking down one of the clusters. You're knocking down a big part of the country and it won't matter.

MATT LEBLANC:

- Yes, yeah, let's just, at some point we're going to fail a cluster and we're going to make one of these basically go away.

DEAN WAVE:

- This is the most often I've heard the words live demo and failed mentioned together in a long time, so let's see.

MATT LEBLANC:

- Let's see how this goes. All right, so before I get into it, let's talk about that database. So you saw this in the screenshot before. I just reset this lab recently, so our primary replica set, you can see, is on 1-0. So you can see that there's replica set zero, replica set one, and replica set two. Now I'm going to switch over to Lens. This is a free tool that I use to manage my clusters. If you take a look here, I have three clusters. There's my EKS cluster, there's my GKE cluster, and there's my Azure cluster. And if I look at the pods there, you can see replica set zero is on EKS, replica set one is on Google, GKE, and replica set two is on Azure. And if I flip back to that Management screen, we can see that replica set one is the primary. So let's go back over there, and replica set one is on GKE. Now let's get into the application, the game itself. I have three instances running and each one of these is running in its respective cloud, and you'll see that up here. So here's our AWS cloud, here's our GCP cloud, and here's our Azure cloud. Now, we need to get some data in there, and you can see here's the high score list. And if I look at each one of them, you'll see that it is the same high score list even though that application is running locally. So if we play the game, I'm going to play this very, very briefly. I'm not here to get the high score. I'm really here just to get a number up on the board and let's go have one more Pinky death. There we go, and let's see. Let's timestamp this, 2:13 PM on the AWS cloud. Hit Save, and we can see our high score has been updated. We're going to do that two more times and again, I'm not going to play this long, just enough to get some data on there. Oh, yeah, I still got the sound on there.

DEAN WAVE:

- I wanted the sound on. That's part of the game.

MATT LEBLANC:

- I kind of missed the waka-waka of my childhood. All right, so now we have another score. We got 2:13 PM on GCP. Save, high score list, right? We can see that's clearly working. One more score, let's add to it.

DEAN WAVE:

- Please turn the sound on this time for the whole thing.

MATT LEBLANC:

- All right, I'll turn it on.

GEOFF LUNSFORD:

- Absolutely.

DEAN WAVE:

- There we go, we got the sound.

GEOFF LUNSFORD:

- Do you remember when this was good graphics?

DEAN WAVE:

- It's still good graphics.

GEOFF LUNSFORD:

- Is it?

DEAN WAVE:

- This is classic, my man. This will never go out of style.

MATT LEBLANC:

- All right, so now 2:14 PM on Azure. So this is called showing your work, right? You see me, I have some data in there. I've added a few more entries over to the high score list. Now let's break something. So I am going to go over to my Lens management console and remember we were running GKE, right? I'm sorry, GKE is running that primary replica set, right? 1-0, now I could simply delete this pod, but as a function of Kubernetes it'll just come back, right? Kubernetes is resilient, it has the ability to recover. Before I do that, I'm going to basically temporarily cordon off, disable the scheduling so it does not just bring that application back up again. So now that I have scheduling disabled, I can go over and delete the pod.
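
The two manual steps Matt performs in Lens correspond to standard `kubectl` operations: `kubectl cordon` marks a node unschedulable, and `kubectl delete pod` removes the pod. A minimal sketch of scripting them follows. The function names and the node, pod, and namespace values are hypothetical, and the approach assumes the pod has no other schedulable node to land on once the node is cordoned.

```python
import subprocess


def failure_commands(node, pod, namespace):
    """Build the kubectl calls that mirror the demo's manual steps."""
    return [
        # Step 1: stop the scheduler from placing new pods on this node.
        ["kubectl", "cordon", node],
        # Step 2: delete the pod; with the node cordoned it cannot be
        # rescheduled there, so the member stays down.
        ["kubectl", "delete", "pod", pod, "--namespace", namespace],
    ]


def simulate_outage(node, pod, namespace, dry_run=True):
    """Print the commands (dry run) or execute them against a cluster."""
    commands = failure_commands(node, pod, namespace)
    for command in commands:
        if dry_run:
            print(" ".join(command))
        else:
            subprocess.run(command, check=True)
    return commands
```

Running `kubectl uncordon` on the same node afterwards re-enables scheduling, which lets Kubernetes bring the pod back.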

GEOFF LUNSFORD:

- Kapow.

MATT LEBLANC:

- Boom, all right, so now we are going to set our clock. I have the clock app. I know it's going to take roughly a minute and a half, give or take, and we're basically going to wait to see how that happens. I will get a notification. You see the timer up here. We are live without a net. Now let's go over and take a look at our KubeSlice, I'm sorry, our MongoDB manager. You'll see even if I refresh this, it still hasn't really gone down yet. It will take a moment. Now what you're going to see here is how KubeSlice has provided the connectivity, and it's not just that we are connecting those clusters. We're only connecting those namespaces, and let me show you something that's a little bit more interesting here. I'm going to go to the host mappings. What is interesting, there's our replica sets. Notice they're using a 192 address. That is how they're communicating. So when we say it is a flat network, that is how these three replica sets of Mongo are communicating. Let's go back.
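
One way to check the "flat network" claim from a list of host mappings is to confirm that every member's address falls inside a single private range. The sketch below uses Python's standard `ipaddress` module. It assumes the "192" addresses come from the RFC 1918 block 192.168.0.0/16, and the host names and addresses in any call are made up for illustration.

```python
import ipaddress

# Assumed overlay range; the transcript only says "a 192 address".
OVERLAY = ipaddress.ip_network("192.168.0.0/16")


def on_flat_overlay(host_mappings):
    """Return True if every mapped address sits inside the overlay range."""
    return all(
        ipaddress.ip_address(address) in OVERLAY
        for address in host_mappings.values()
    )
```

Because the range is private, addresses in it are not routed on the public internet, which matches the "non-routable" point made earlier in the talk.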

GEOFF LUNSFORD:

- So they all feel like they're on the same network?

MATT LEBLANC:

- They all think they're on the same network. We basically encapsulated, created that VPN tunnel, and they're using that 192 network to communicate. It's easy to manage, it's easy to set up, and it is also secure, right? So one of the downsides of doing this without KubeSlice is essentially you would have to open up ports to connect the two clusters, and the challenge of that is it creates what we refer to as the noisy neighbor scenario, which means if you connect cluster A to cluster B, everything in cluster A has access to everything in cluster B, which could be a problem. All right, so there's my little alarm. Let's go see how we are doing here. Going to go back and, oh, yes, I didn't even have to refresh. You can see cluster 1-0 is now down and we have now failed over to the replica set 2-0. So let's see if this still works. So let's go over to our Azure. That's where 2-0, the replica set two, was working. We can see our high scores list as we saw. Here's our three examples, and let's hit-

DEAN WAVE:

- Already saved before the cluster went down, yep.

MATT LEBLANC:

- Yep, and let's hit play. Oh, no, let's turn on the waka-waka.

GEOFF LUNSFORD:

- I'm interested in the high score. Is it going to show up now that that instance is down? If I had gotten the high score?

MATT LEBLANC:

- I'm sorry, what was that Geoff?

GEOFF LUNSFORD:

- I'm interested in whether my high score is going to be saved. Is it going to show up in the other instance?

MATT LEBLANC:

- All right, so let's go. This is on Azure, save, and go to that high score. All right, and we'll go back here and view high scores, back, new game, play. I got to get enough score to get on there. Oh, I should not have taken the power pellet. I should have waited for them to turn back again. All right, and here we go. And one more time. And we got 2:18 PM on our AWS cluster, save, and there we go. So we've now managed to do that failover using KubeSlice to keep that connectivity. All right, Geoff, why don't you tell us a little bit more about the other use cases that apply here?

DEAN WAVE:

- Besides Pac-Man.

GEOFF LUNSFORD:

- Yeah, I mean, yeah, Pac-Man. So we've been running into a number of different use cases when we're talking to enterprise customers. Hybrid cloud, or burst-to-cloud and edge deployments, is one that we see a lot. Multi-cloud database resiliency, which you just talked about. Application isolation and zero trust. Zero recovery time objective, or zero RTO. We also have real-time cluster availability, or sorry, observability, and application migration. So I'm going to walk through these fairly quickly so you can just get an idea of what we're seeing out in the marketplace. We're happy to dive into these in more technical detail if you'd like, but you can deploy a slice across any Kubernetes distribution. Each slice creates a single global namespace for an application in your environment. You can run across data centers, cloud regions, multiple clouds, or even edge deployments, eliminating all the hard work that you would have to do on the left side of the slide while getting all the goodness on the right side built into the solution.
So when we look at use case two, we're using that same slice or secure data path. We can deploy multi-cluster or multi-cloud stateful applications on Kubernetes. We can run these in active-active deployments and have them up and running in minutes and be fully ephemeral. This works with a lot of different technologies. So it could be things like Couchbase, MongoDB, Redis, Cockroach. We actually had a customer that wanted to run Keycloak in an active-active deployment for single sign-on and do that safely on OpenShift. So that was something that we were able to do with a slice. It doesn't matter what the technology is.
When you go to use case three, we're using that same data path. So we're using the same technology to achieve all of these different use cases. This is what we call zero recovery time objective, and what we're doing is working with any popular data management platform in the Kubernetes ecosystem. Using that KubeSlice, we can create a fully redundant system by including a replica in another cluster that could be in a different availability zone or on another cloud.
In use case four, we're using that slice to create application isolation, or guardrails, to help with a zero-trust initiative. We can secure your deployment and limit blast radius from application to application across your environment. This could be something like test, dev, and production, right? It could be a multi-tenant application, a banking app, for example, where you want to separate your customers, and this limits your blast radius.
When you talk about use case five, telemetry data, right? We can take all the telemetry data that's created in that slice across all of those clusters, and we can feed it into something like Prometheus or another monitoring solution if you want. By doing this, we can create real-time observability reports for the entire application, even if that cluster is spanning from data center to data center or multiple hyperscalers, and even if it includes different distributions of Kubernetes.
So when you look at use case six, this will be our last use case because it seems to be one of the most popular, and it's one that we're going to focus on in our next webinar. Because we're running that layer three virtual private network, we can seamlessly migrate any containerized application from cluster to cluster, or from data center to cloud, or cloud to cloud. So you can migrate applications seamlessly without lift and shift.
So why do you want to do this now? You need to build redundancy and disaster recovery into your cloud-native strategy. The complexity, the time to deploy, and the human capital required in the past are significant barriers. With KubeSlice, Avesha offers an all-in-one solution. You can be up and running today, saving your organization months of time, human capital, and lots of headaches. So if you want to try KubeSlice in your environment, here's a link to a 30-day trial license. Our team is happy to assist you with any questions about setting up KubeSlice or diving into potential deployments in more technical detail if that's your requirement. So let's see if there are any questions.

DEAN WAVE:

- Yeah, I've got a handful actually that have been provided. So well, someone mentioned a couple slides back I guess you showed service mesh and I mean, it's a pretty blunt question. Why do I need this? I already have service mesh set up.

GEOFF LUNSFORD:

- Matt, you want to take that one?

MATT LEBLANC:

- Yeah, we are not here to replace your service mesh. This really provides your application connectivity. Service mesh really has a different function and we're not here to replace or displace it.

DEAN WAVE:

- Okay, so in addition to basically?

MATT LEBLANC:

- Yes.

DEAN WAVE:

- Okay, can I mix together different setups like Couchbase on AWS, Reddit, Redis, Reddit, see this is where my brain's going. Redis on Azure, different countries, things like that?

MATT LEBLANC:

- Yeah, I mean, one of the main advantages here is that you're able to leave the data where it's created and where it's being managed, and have other applications make reference to it. But you still have the security without having to create the ingress and egress rules and make all the adjustments to your firewalls and your VPC. So it's much easier to manage, and it allows you to take advantage of that data gravity, where you may have a cloud or a cluster that has a lot of important information, and you have another cluster in a different cloud elsewhere. You now have access to that data, and you're doing it in a secure and high-performance fashion.

DEAN WAVE:

- Okay, Geoff, I think this one is for you. Are there any use cases or anyone who should not expect to see much benefit from KubeSlice?

GEOFF LUNSFORD:

- Wow, we think that it's a great platform to start out your Kubernetes journey because it does provide you that security. It provides you a way to start building your applications and then seamlessly migrate into a multi-cloud strategy, give you that redundancy, provide a DR facility. So if you start building there, it's great, right? I don't really see a use case where it doesn't fit in. We've even talked to people who are running mainframe, and they're starting to do transformation on the mainframe and looking at running Kubernetes there. You could connect a slice to your mainframe and to your Kubernetes cluster, potentially. So there's all sorts of ways that this can be used. Matt and I have kind of joked about this, that we haven't even seen the breadth of use cases. Every day we discover something new, it's crazy.

DEAN WAVE:

- Okay, and then-

MATT LEBLANC:

- It's a new tool available in your toolbox and as Geoff had pointed out, we haven't even begun to discover all of the use cases that would make KubeSlice an advantage for our enterprise customers.

DEAN WAVE:

- Okay, and then I had one question that I kept all the way through. I just wanted to be clear about something. So our demo showed like three hyperscalers, right? In three different parts of the country. There's no reason why I couldn't use two, no reason why I couldn't use 10, no reason why I couldn't have them spread around the globe. Or is there, I legitimately don't know.

GEOFF LUNSFORD:

- So we work with Cox Communications on their Cox Edge platform, and they have 30 different data centers. So if you're spinning up an application in that environment and you want to have two, three, four, you want to seamlessly add additional data centers as usage and location become important factors for the application or the data that you're running. We can do that seamlessly and you can add clusters or take them away as needed, and this translates to a lot of different edge cases that are coming online, especially when you start thinking about running AI and running that closer to the edge to cut down on the cost of egress and ingress.

MATT LEBLANC:

- So keeping your data on the edge is one use case or you could think about your data governance where data needs to reside within the country that's being managed, but-

DEAN WAVE:

- More and more common these days.

MATT LEBLANC:

- It may need to live let's say in Canada, but you could allow your clusters in other locations to have access to it in a secure fashion. Where the data still lives in Canada in this example, you can access it from a cluster in a different region or a different country.

GEOFF LUNSFORD:

- You can also think about retailers out there where they might have 2,000 locations and they're running Kubernetes now in their office as a kind of a data platform for the store, collecting a bunch of telemetry data and pulling that back.

MATT LEBLANC:

- Let's just say the hardware store, the one with the blue logo or the one with the orange logo, they're all running K3s at the local retail store, and they're also running a large cluster that is in one of the hyperscalers. And for that scenario it would allow them to have easy, secure connectivity for data protection. Just imagine, and I'm not trying to pick on Florida, but they're currently being hit by a hurricane, and it is not uncommon for one of those hardware chains to lose a store to three feet of water. So this would be an easy way for you to connect to the mothership in the hyperscaler and make sure that you have all of your retail data from today's sales before your little K3s server is under water.

DEAN WAVE:

- Right, in the closet in the back room of the warehouse, yeah, okay. All right, that was good. We kind of promised when we set this up originally that we'd take about 30 minutes, and it's been about 30 minutes. So, I'm Dean. Thanks very much for joining. Thanks very much to Geoff and to Matt. Wish Pac-Man could have gone on a bit longer, but I understand we have time constraints, and thank you for playing the sound. I really appreciated that. I think it added a lot to the demo, actually.

MATT LEBLANC:

- Thank you, we appreciate the time and opportunity to talk a little bit about KubeSlice.

DEAN WAVE:

- That was fun, all right. We'll be back next month with another episode. In the meantime, thanks, everyone. Have a good day, bye.