
7 Considerations for Multi-Cluster Kubernetes

Ray Edwards

VP - NorthEast Sales, Avesha

In the IT space today, customers often use the terms multi-cloud and hybrid cloud interchangeably, without necessarily understanding the distinction between them.

A hybrid cloud is a cloud computing environment that combines public and private (typically on-premises) clouds, allowing organizations to utilize the benefits of both. In a hybrid cloud, an organization can store and process critical data and applications in its private cloud, while using the public cloud for non-sensitive workloads, such as testing and development.

The hybrid cloud model is becoming increasingly popular among organizations because it enables them to optimize their IT infrastructure, while keeping costs under control. Additionally, hybrid cloud environments can provide a more seamless and integrated user experience, with the ability to move workloads between public and private clouds based on business needs.

Multi-cloud, on the other hand, is a setup that involves the use of multiple cloud computing platforms from different vendors. This approach enables organizations to use the best cloud services and features from different providers to create a more optimized and customized IT environment.

Both these approaches provide IT groups with flexibility and certain benefits.

In a multi-cloud environment, an organization can leverage the strengths of different cloud providers, such as AWS, Azure, Google Cloud, and others, to achieve a range of benefits such as increased scalability, flexibility, resilience, and cost-effectiveness. Multi-cloud also enables businesses to avoid vendor lock-in and achieve greater redundancy, as data and applications can be distributed across multiple cloud platforms.

Hybrid cloud, for its part, gives businesses greater flexibility in their IT infrastructure. They can leverage the scalability and cost-effectiveness of the public cloud for non-critical workloads, while keeping sensitive data and applications within their own private cloud, which provides greater control, security, and compliance.

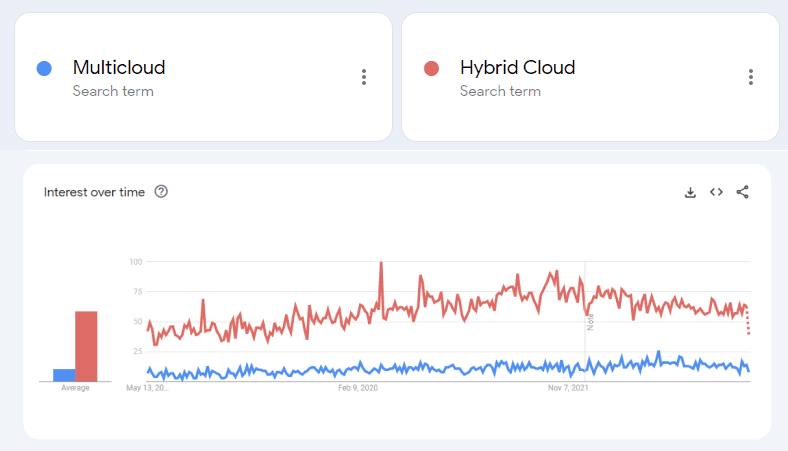

Google Internet searches of Hybrid Cloud and Multi Cloud

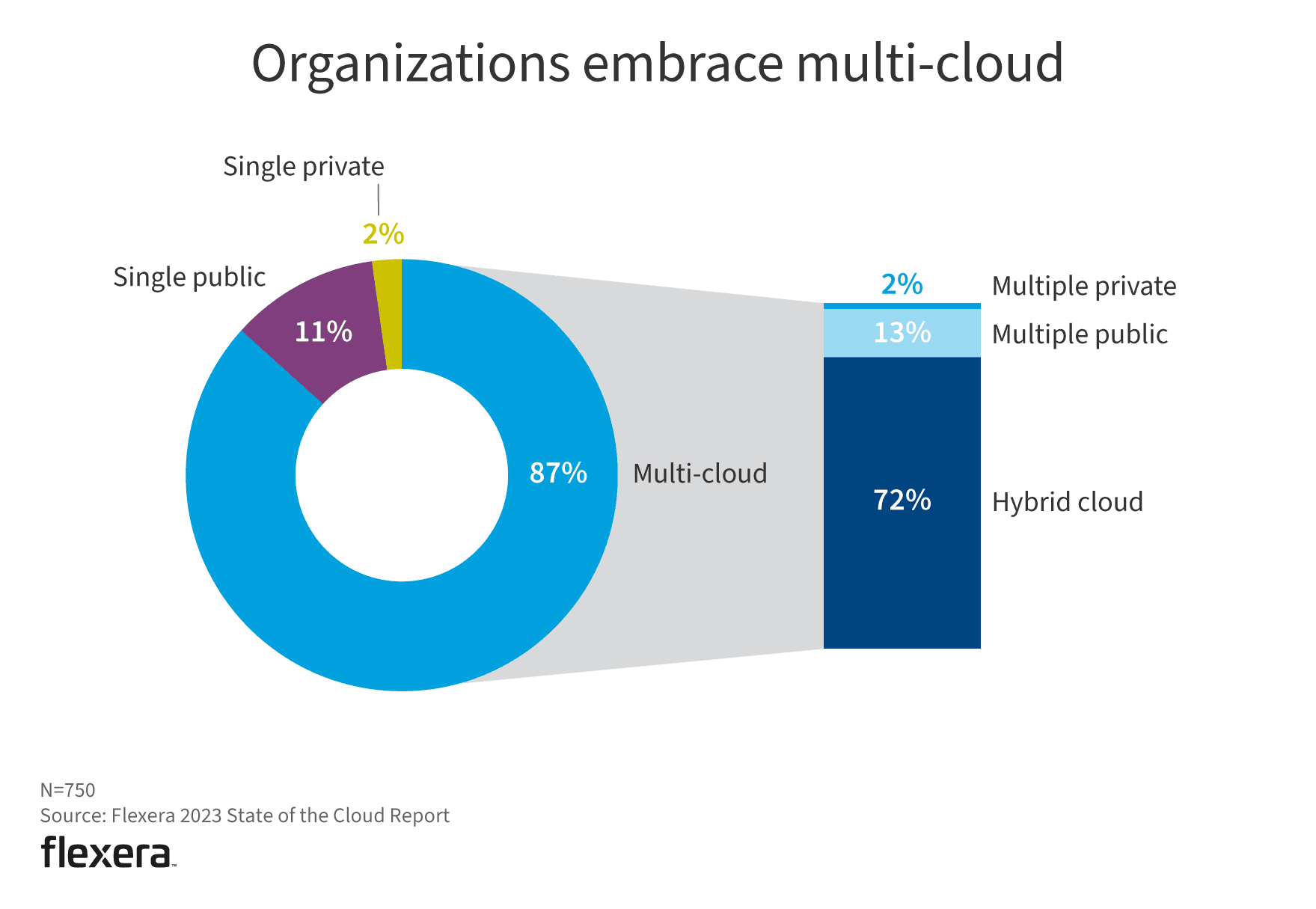

Hybrid cloud and multi-cloud adoption is growing rapidly. The Flexera 2023 State of the Cloud Report highlights that a vast majority of enterprises have adopted a hybrid cloud model, and almost 87% have a multi-cloud approach.

While both of these approaches have inherent benefits, this blog raises a number of challenges that organizations should consider in advance, along with some possible solutions.

Complexity of Cloud orchestration

Managing multiple cloud environments, each with its own unique nuances, is a significant challenge. Even Kubernetes, which was envisioned as a way to abstract away infrastructure dependencies, is implemented differently by different cloud providers. EKS, AKS, Rancher, Mirantis, Tanzu, and OpenShift are only a few of the major Kubernetes distributions (and managed Kubernetes services) that IT leaders have to contend with. Each one has some specific configuration that presents a challenge when moving workloads from one platform to another. Even if one decides to deploy the same distribution, say a private Rancher cluster, onto all the platforms, the end result is the headache of getting into the business of managing Kubernetes platforms across providers. You could farm that role out to one of the multi-cloud management providers, but they too have their own deficiencies.

This approach also merely shifts dependence from one vendor to another, which undermines the very reason for moving to multi-cloud: avoiding single-vendor lock-in.

A novel approach that is emerging, and one that IT leaders should consider, is the idea of virtualizing the applications themselves. By virtualizing or isolating at the application or microservice (namespace) level, users free themselves from the underlying platform, or even the Kubernetes distribution, and can instead run the application or microservice seamlessly anywhere.

Interoperability

Aside from the orchestration itself, IT managers must also contend with application interoperability across their deployments. This is where the cloud provider’s interest and the customer’s interest don’t always align.

AWS, for example, wants to provide a service to its customers in the most cost-effective and efficient way possible. It will frequently customize the service, say a Redis database, to run efficiently on AWS infrastructure. This hyper-customization can give AWS a competitive advantage over other platforms, but it also has a downside.

Customers who deploy on this hyper-customized version of Redis may find that their database can no longer be easily ported to, say, Azure Cache (Azure’s deployment of Redis). Once again, customers may find themselves back where they started, i.e., locked in to a specific provider.

So the challenge customers must solve is how to ensure that data and applications in different cloud environments can be distributed and kept synchronized.

Data Portability

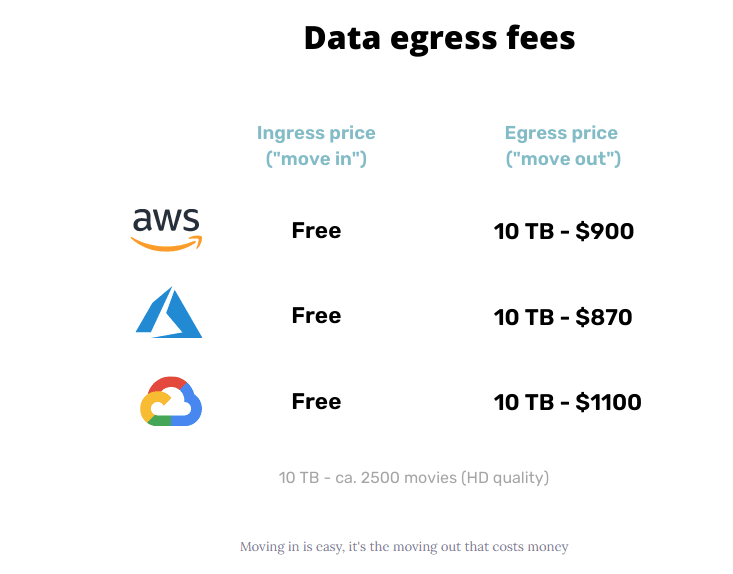

There is another nasty little surprise that hits some customers when it comes to their data. Cloud providers make it fairly easy to upload data into their platform, most times with no extra fees, but if a customer wants to move data out of the platform, they can get hit with hefty ‘data egress’ fees. This ‘data tax’ can be quite expensive depending on the amount of data that is moved out.

This is better known as the “Hotel California effect”: you can check out any time you like, but you can never leave.
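To make the ‘data tax’ concrete, here is a minimal Python sketch that estimates a one-time egress bill. The function name, the per-GB rate, and the free allowance are hypothetical placeholders rather than any provider's actual price list; real pricing is usually tiered and varies by region and destination.

```python
def estimate_egress_cost(data_gb: float, rate_per_gb: float, free_gb: float = 0.0) -> float:
    """Estimate a one-time data egress charge in dollars.

    All inputs are illustrative assumptions; substitute the figures from
    your provider's published pricing.
    """
    if data_gb < 0 or rate_per_gb < 0 or free_gb < 0:
        raise ValueError("inputs must be non-negative")
    # Only the data above the free allowance is billed.
    billable_gb = max(0.0, data_gb - free_gb)
    return round(billable_gb * rate_per_gb, 2)


# Hypothetical scenario: moving 50 TB (50,000 GB) out of a platform at an
# assumed $0.09 per GB, with an assumed 100 GB free allowance.
cost = estimate_egress_cost(data_gb=50_000, rate_per_gb=0.09, free_gb=100)
print(f"Estimated egress cost: ${cost:,.2f}")
```

Even with these made-up numbers, the point stands: the bill scales linearly with the volume moved, so it is worth estimating before committing large datasets to a single platform.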

Security & Governance

Of course, security and governance are paramount to any application deployment. The challenge is compounded many times over when leaders have to plan for hybrid cloud and multi-cloud deployments.

A thorough discussion around security and governance for hybrid cloud and multi-cloud deployments will be covered in a future blog, but for now customers should consider a few items as essential to ensure success.

- Encryption of all traffic

- Monitoring and audits of clusters and nodes

- Locking down and securing endpoints

- Vulnerability scanning and regular patching

- Enforcing a Zero Trust security posture

The steps above help provide security at the platform or cluster level, but practitioners also need to pay particular attention to security at the individual application level.

Since a single cluster may host many applications, it will also likely have multiple namespaces set up within it. Each application may have its own set of security policies and user access rules that need to be enforced. Tools such as Kubeslice are indispensable here: they allow managers to set namespace-level policies and propagate them seamlessly across all platforms where the slice (namespace) is running.
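The idea of defining a policy once per namespace and propagating it everywhere that namespace runs can be modeled in a few lines of Python. This is only a conceptual sketch: the `NamespacePolicy`, `Cluster`, and `propagate` names are hypothetical and are not Kubeslice's API or any Kubernetes client call.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class NamespacePolicy:
    """Hypothetical namespace-level policy: who may access it, and whether
    traffic must be encrypted."""
    namespace: str
    allowed_users: frozenset
    require_encryption: bool = True


@dataclass
class Cluster:
    """Hypothetical stand-in for a cluster on any provider."""
    name: str
    namespaces: set
    policies: dict = field(default_factory=dict)  # namespace -> NamespacePolicy


def propagate(policy, clusters):
    """Apply one policy to every cluster that hosts the policy's namespace.

    Returns the names of the clusters that were updated.
    """
    updated = []
    for cluster in clusters:
        if policy.namespace in cluster.namespaces:
            cluster.policies[policy.namespace] = policy
            updated.append(cluster.name)
    return updated


# Illustrative clusters; the names are made up.
clusters = [
    Cluster("eks-east", {"payments", "web"}),
    Cluster("aks-west", {"payments"}),
    Cluster("onprem-1", {"web"}),
]
policy = NamespacePolicy("payments", frozenset({"alice", "bob"}))
updated = propagate(policy, clusters)
print(updated)
```

The design point is that the policy is keyed by namespace rather than by cluster, so the same definition follows the application wherever it is deployed and clusters that do not host the namespace are left untouched.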

Resource Optimization

One major reason companies pursue cloud deployments is to reduce operating costs by reducing or eliminating on-premises infrastructure. But a multi-cloud environment can easily become a costly exercise without effective management.

My previous blog covered cost optimization considerations for Kubernetes clusters in depth. In multi-cloud environments, customers may find that a single autoscaling process does not work the same way for every cloud vendor. This is yet another reason to consider an automated tool such as SmartScaler. In addition to right-sizing the Kubernetes deployments, SmartScaler uses reinforcement learning to understand the specific characteristics of each cloud provider’s autoscaling process and optimizes the deployment accordingly.

Talent shortage

One reason enterprises are hesitant about hybrid cloud or multi-cloud setups is the lack of skills for such projects. There is a dearth of architects who have the expertise and deep know-how to properly manage and run multi-cloud or hybrid environments. Business leaders have to find and retain these practitioners before embarking on a hybrid journey.

Resiliency

It is rather surprising how many businesses migrate workloads to the cloud without really planning for resiliency. Any simple search will provide multiple instances where one of the major cloud providers suffered outages that impacted businesses. Simply putting one's trust in the distributed cloud instance does not protect an application. Businesses should implement a resiliency strategy that includes load balancers that route traffic to an active cluster seamlessly in cases of failure.

Since cloud vendors are not going to encourage workloads to be routed to a competitor, IT leaders should implement solutions such as Kubeslice that abstract the workload from the underlying infrastructure, ensuring that the specific application is always available via intelligent routing.
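The failover behavior described above can be sketched as a priority-ordered choice among healthy clusters. This is a minimal illustration, not how any particular load balancer or Kubeslice implements routing; the `ClusterEndpoint` type, the cluster names, and the hard-coded health flags are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClusterEndpoint:
    """Hypothetical record for one cluster behind a global load balancer."""
    name: str
    priority: int   # lower number means more preferred
    healthy: bool   # would come from a real health check; hard-coded here


def pick_active_cluster(endpoints: List[ClusterEndpoint]) -> Optional[ClusterEndpoint]:
    """Return the most-preferred healthy cluster, or None if all are down."""
    healthy = [e for e in endpoints if e.healthy]
    return min(healthy, key=lambda e: e.priority) if healthy else None


endpoints = [
    ClusterEndpoint("primary-provider-a", priority=1, healthy=False),  # simulated outage
    ClusterEndpoint("standby-provider-b", priority=2, healthy=True),
    ClusterEndpoint("standby-onprem", priority=3, healthy=True),
]
target = pick_active_cluster(endpoints)
print(target.name if target else "no healthy cluster")
```

Because the standby clusters sit with a different provider or on-premises, an outage at the primary provider does not take the application down; traffic simply moves to the next healthy entry.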

It is also important not to swing to the other extreme and over-engineer resiliency.

Some IT leaders leave it to the individual application teams to craft their own disaster recovery strategy. This can lead to multiple conflicting DR setups which in turn introduce more complexity into the environment.

At an enterprise level, businesses should adopt a Cloud Native Disaster Recovery strategy that provides a baseline for Kubernetes applications and databases and allows individual teams to tweak it to their specific needs.

| Focus Items | Traditional Disaster Recovery approach | Cloud Native Disaster Recovery approach |
|---|---|---|
| Failure detection and trigger | Human | Fully autonomous |
| DR actions / Procedure | A mixture of human action and automation | Fully automated |
| Recovery Time Objective (RTO) | From minutes to hours | Near zero |
| Recovery Point Objective (RPO) | From zero to hours | Zero |
| Process Owner | Mostly Storage team | Application itself / team |
| Technical components | From Storage products (backups, volume, sync) | From Networking products (east-west communication, global load balancer) [Note: Kubeslice enables adoption of this approach for all K8s apps] |
